The elasticity of identity identification

Client:

Department for Work & Pensions (DWP)

Area:

Identity & Trust

Role:

Senior Identity & Trust Specialist

The problem

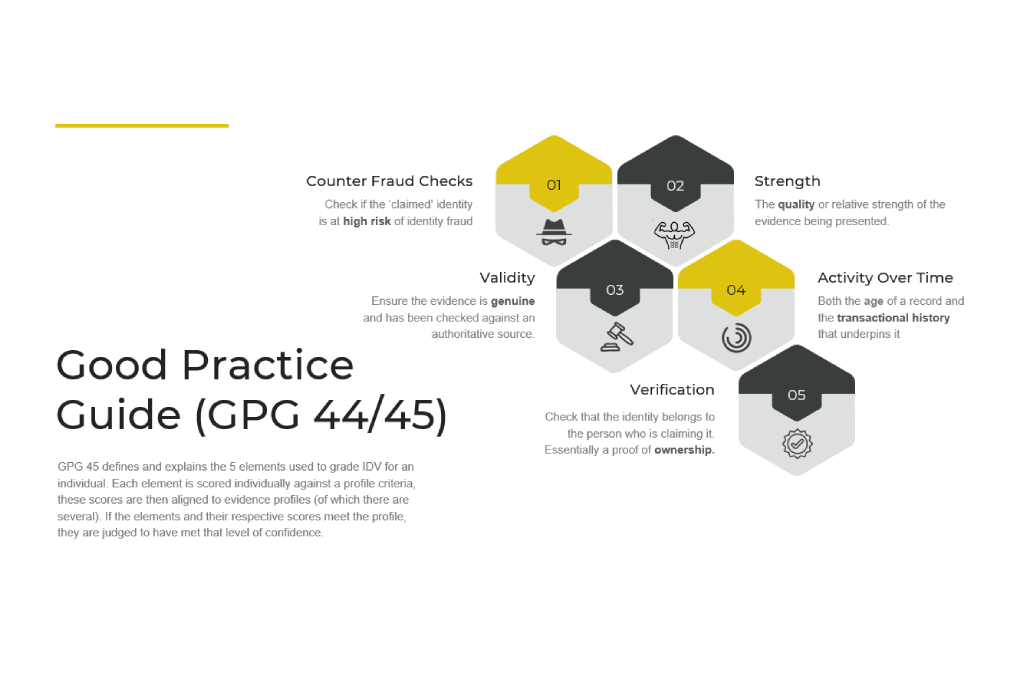

In 2012 GDS published its series of Good Practice Guides (GPGs) for providers of identity assurance for government services:

- GPG 43: Requirements for Secure Delivery of Online Public Services (RSDOPS)

- GPG 44: Authentication Credentials in Support of HMG Online Services

- GPG 45: Validating and Verifying the Identity of an Individual in Support of HMG Online Services

In my role as both a Product Manager and later as an Identity & Trust Specialist in Policy, these became critical documents for me to understand, reference and articulate to others.

However, GPG 45 alone weighs in at 33 pages (8,972 words), including a 29-page subsection with 32 individual ID profiles presented as tabular data.

So I think it’s fair to say these were not the most accessible reads. Even the publishers themselves recognised (via the Cabinet Office website) that “these guides are necessarily quite technical”.

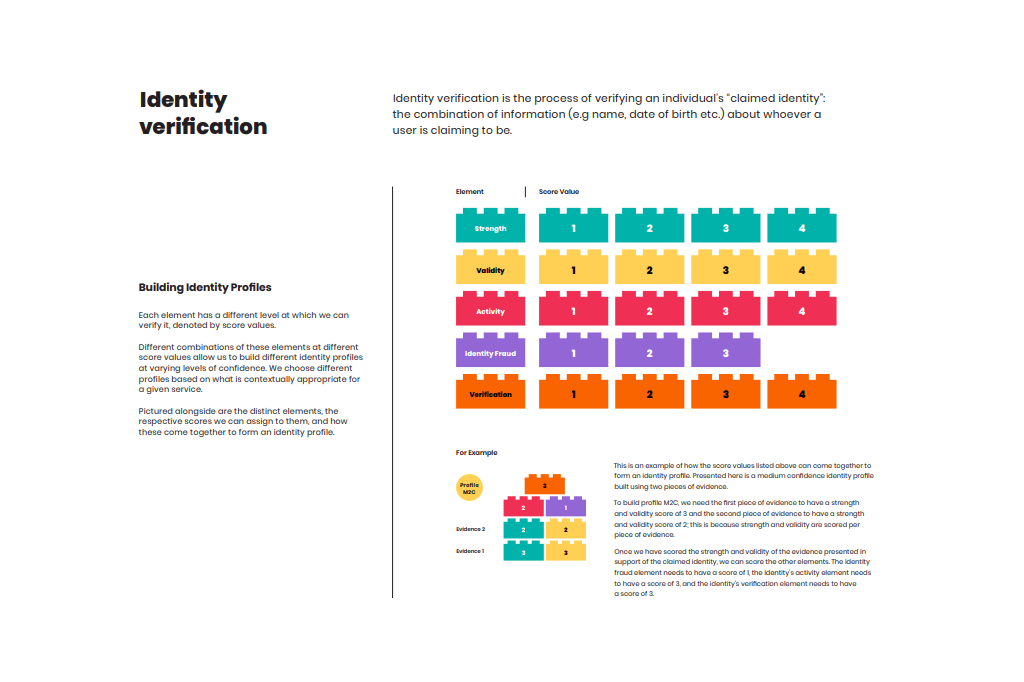

I had already seen a couple of attempts to re-frame this information, for example by the digital consultancy Hippo, who opted for a Lego bricks analogy (see below).

But this interpretation always seemed far too rigid to me, especially when dealing with a concept as fluid as identity. Whilst I could appreciate the bricks were meant to be interchangeable, I couldn’t shake the feeling that this subject area should be more about depth than height.

What did I do about it?

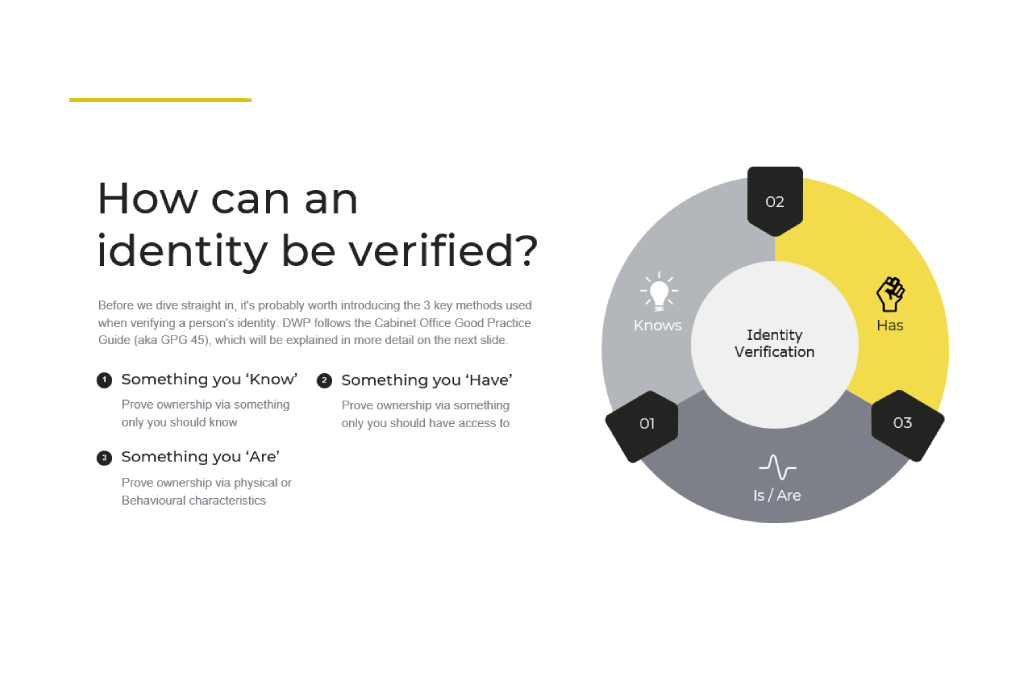

I started by moving away from the heavy detail and instead focused my attention on creating a framework that could literally ‘flex’. After all, an identity can be challenged in a variety of ways, for a variety of reasons, across a variety of different channels.

My focus was less about how to ‘achieve’ a certain score and more about why that score was ‘appropriate’ or ‘best suited’ to that specific scenario.

I also wanted to pay particular attention to language, since my early research showed this was the number one reason for stakeholder assumptions. The difference between verification, validation, and authentication, for example, was a recurring and lengthy bone of contention.

To address these unnecessary distractions, I created a couple of one-pagers, which sought to cut through a lot of the noise, acting as anchors for discussions and stakeholder alignment.

With these footholds in place, I was able to return to my goal of finding a framework that flexed.

In the interests of ‘working in the open’, I’ve managed to find some of my original notes and general musings from the numerous meetings I arranged during this time.

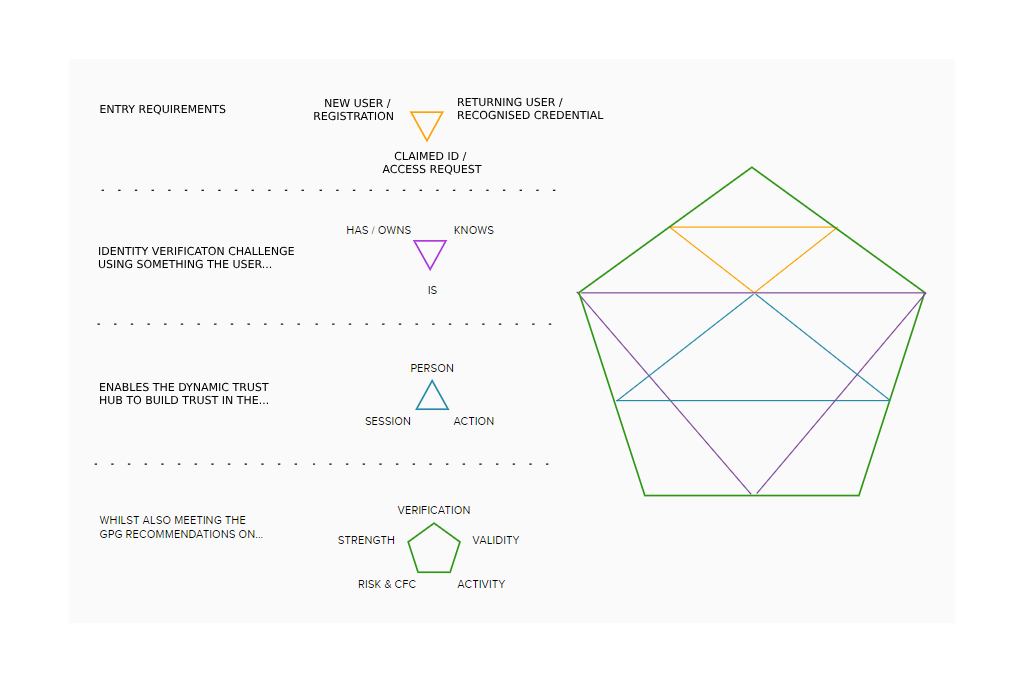

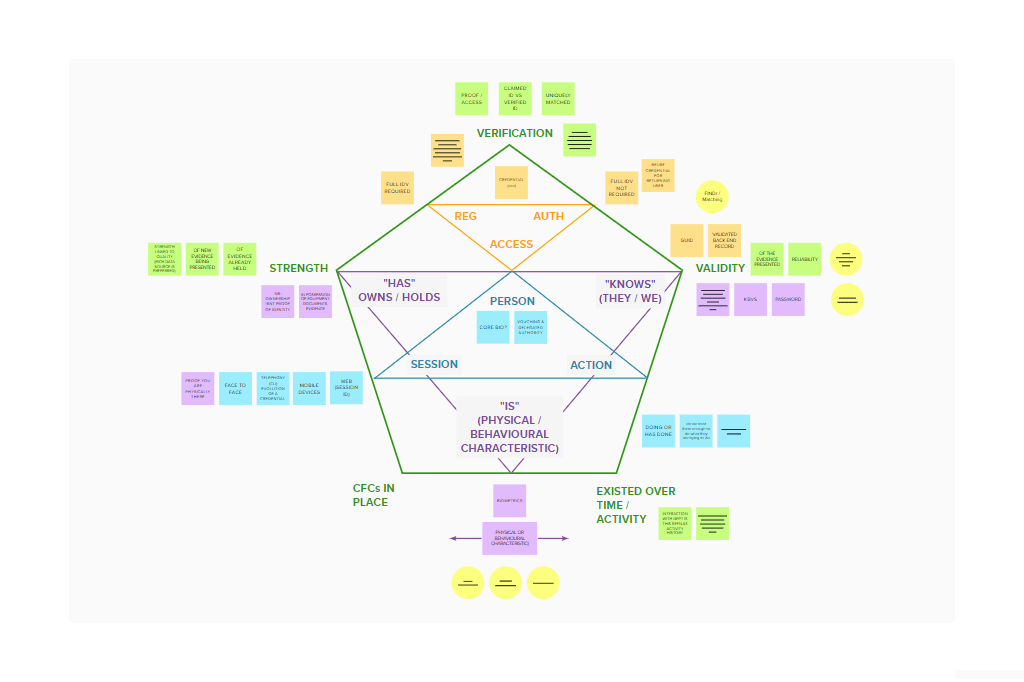

What started to emerge from those early brain dumps was a series of triangular patterns which reflected the different phases / stages within the identity verification process. These patterns also needed to be encompassed by the 5 key elements of GPG, which, conveniently for me at least, lent themselves to a pentagon. The following images were my first attempts to transfer these relatively chaotic scribbles into a usable framework.

However, the five GPG components were still just disparate blocks, or ‘points on my pentagon’ for want of a better phrase. They were linked with one another but they still didn’t illustrate the ‘flex’ that I felt was crucial for telling this particular story.

So whilst these early incarnations were essentially ‘correct’ and helped me to explore these concepts with others, they also started to look, at best, like the wall of a conspiracy theorist or, at worst (as someone else put it to me), like some occult iconography.

So I decided I would need to pare things back once more.

How did I go about this?

Contrary to my initial hunch, I shifted my attention back to the scoring system that underpinned the guides.

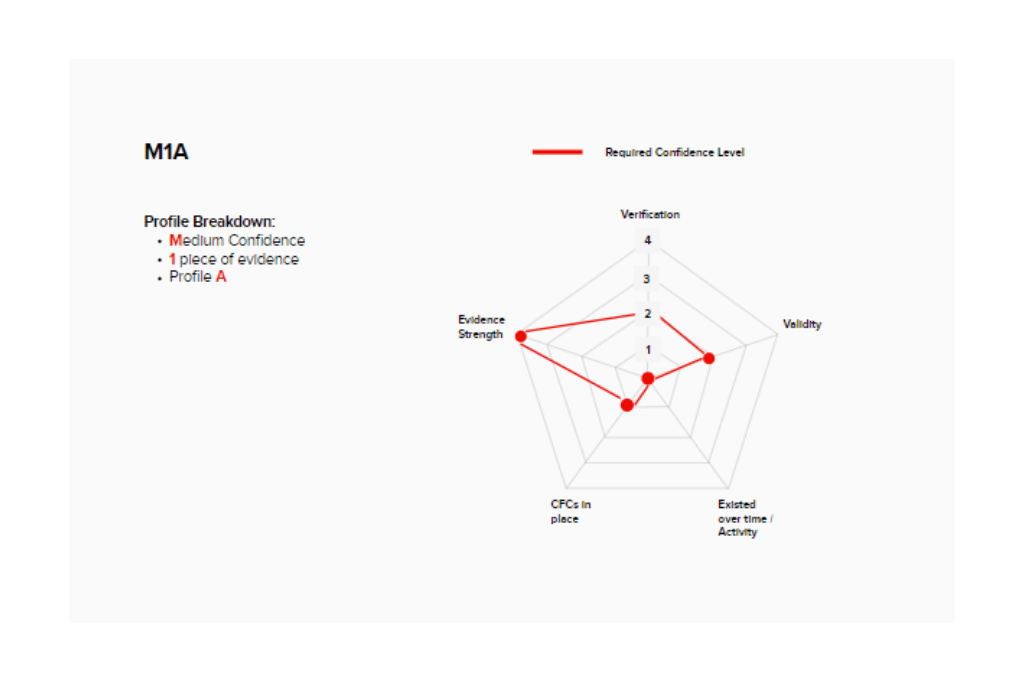

To clarify… each of the 5 elements is scored individually against profile criteria. These scores are aligned to Evidence Profiles, of which there are 32. If the elements and their respective scores meet a particular profile, they are judged to have met that level of confidence.

But with 32 profiles to choose from, I needed to find a way to show how these scores could not only be achieved but also augmented, and how they could flex between channels too. This was something I’d not seen done effectively up to this point.

My approach

Each profile is made up of the same 3 elements:

- Level of Confidence required (Low, Med, High)

- Pieces of evidence presented (1,2,3 etc)

- Profile ID that matches specific GPG scoring thresholds (A,B,C etc)

So for an ID challenge that requires a medium level of confidence, with only one piece of evidence submitted, but which also meets the GPG scores as defined by Profile A, you would be permitted to accept ‘M1A’. I decided to reintroduce my pentagons and represent this information in the following way:

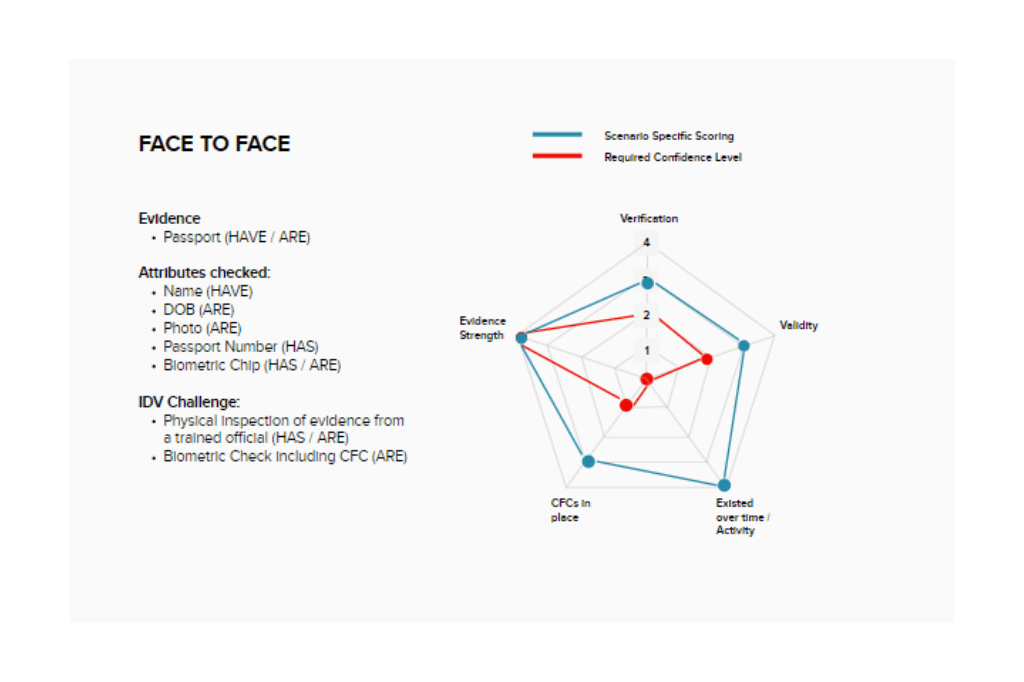

This was essentially the penny-dropping moment for me, as I could now discuss M1A as a pattern rather than a score. But to truly see the value of this framework I needed to treat it like a radar diagram and overlay channel-specific scenarios too.
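The three-part profile code described above can be sketched in a few lines of Python. This is only an illustration of the naming pattern, not anything taken from the guides themselves: the class name, the function name and the ‘L’ and ‘H’ abbreviations are my assumptions, since only ‘M’ appears in the example.

```python
from dataclasses import dataclass

# Abbreviations for the three confidence levels.
# Only "M" is confirmed by the M1A example; "L" and "H" are assumed.
LEVEL_CODES = {"Low": "L", "Med": "M", "High": "H"}


@dataclass(frozen=True)
class ProfileCode:
    confidence: str      # "Low", "Med" or "High"
    evidence_count: int  # number of pieces of evidence presented
    profile_id: str      # letter matching a set of GPG scoring thresholds

    def __str__(self) -> str:
        level = LEVEL_CODES[self.confidence]
        return f"{level}{self.evidence_count}{self.profile_id}"


def parse_profile_code(code: str) -> ProfileCode:
    """Split a code such as 'M1A' back into its three parts."""
    names = {short: name for name, short in LEVEL_CODES.items()}
    return ProfileCode(names[code[0]], int(code[1:-1]), code[-1])


print(ProfileCode("Med", 1, "A"))
print(parse_profile_code("M1A"))
```

Treating the code as structured data rather than a bare string makes it easier to group profiles by confidence level or by evidence count later on.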

In this theoretical example, a citizen is using their passport as their single piece of evidence and has been challenged in a face to face situation. The relative strength, validity, verification, activity and counter fraud check all score highly, with many exceeding the threshold for the M1A Profile. This is mainly due to the number of ways the evidence can be checked:

- Photo

- Watermarks

- Hologram patches

- Fluorescent pages

- Biometric chip

- Laser perforated numbers

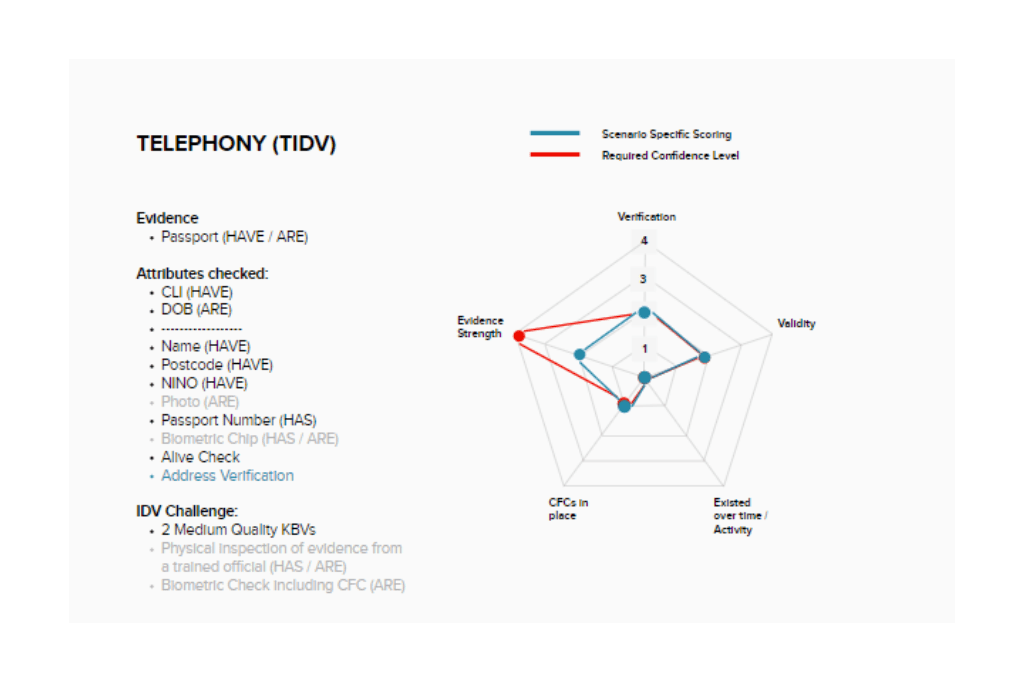

The key point here, though, is that a large proportion of these checks can only be carried out in a face to face situation, with a trained official who has access to relevant capabilities, like a biometric chip reader. The image below shows what happens when you use the same piece of evidence as part of a telephony challenge.

The 5 key elements of GPG have now ‘flexed’ to form a new pattern. The majority of the elements are now only just within the limits that ‘M1A’ sets out for verification, validity, activity and counter fraud checks. However, the evidence now falls short in the strength category, moving from a 4 to a 2.

But it’s not the evidence itself that is now weaker; after all, it is still a valid UK passport. Rather, it is the ways in which that document can be checked via this specific platform (telephony).
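To make the ‘flex’ concrete, here is a minimal Python sketch that compares the same passport against the M1A thresholds on two channels. Only the strength score moving from 4 to 2 comes from the example above. The threshold values, the remaining scores and the `shortfalls` helper are hypothetical placeholders, and the real figures would need to come from GPG 45.

```python
# The five elements scored for each piece of evidence.
ELEMENTS = ("strength", "validity", "verification", "activity", "counter_fraud")

# Hypothetical thresholds for profile M1A (placeholder values only).
M1A_THRESHOLDS = {
    "strength": 3, "validity": 2, "verification": 2,
    "activity": 2, "counter_fraud": 2,
}

# Hypothetical scores for a UK passport on each channel.
# Only strength (4 face to face, 2 by telephone) is taken from the text.
FACE_TO_FACE = {
    "strength": 4, "validity": 3, "verification": 3,
    "activity": 3, "counter_fraud": 3,
}
TELEPHONY = {
    "strength": 2, "validity": 2, "verification": 2,
    "activity": 2, "counter_fraud": 2,
}


def shortfalls(scores: dict, thresholds: dict) -> dict:
    """Return each element that scores below its threshold, with the gap."""
    return {
        element: thresholds[element] - scores[element]
        for element in ELEMENTS
        if scores[element] < thresholds[element]
    }


print(shortfalls(FACE_TO_FACE, M1A_THRESHOLDS))  # no gaps on this channel
print(shortfalls(TELEPHONY, M1A_THRESHOLDS))     # strength falls short
```

An empty result means the pattern sits on or outside the profile on every axis. Any element that is returned marks the corner of the pentagon where the challenge would need augmenting.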

The person calling may well be holding that passport in their hands (something they have), and be able to read out the passport number printed on it, but there is no guarantee they are the person the passport was issued to. An agent on the phone will not be able to check their photo or other visual cues either. So in cases such as these, the identity challenge might need to be augmented with some additional knowledge-based verification questions (something they know).

There is even an argument, from a product perspective at least, that something like ‘selfie upload’ could be a valuable addition too. However, that would be more of a capabilities discussion, which would invariably bleed into policy, legal, and many other disciplines.

The introduction of this ‘flexible framework’ allowed stakeholders to consider things in a far more visual way. In doing so, they were able to worry less about ‘scores’ and look more closely at ‘best fit’. If a certain pattern stuck out like a sore thumb, it prompted discussions that would perhaps have been overlooked when faced with a wall of numbers.

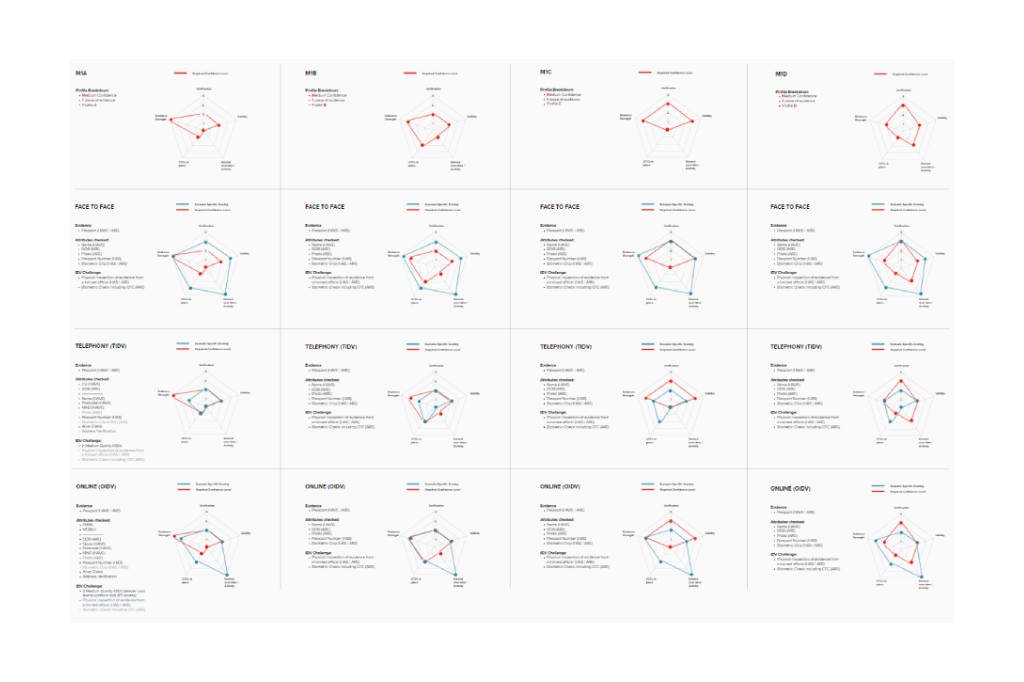

The output

Of the 32 profiles, the image below shows a tiny cross section. Each profile needed to be created through 3 lenses: Face to Face, Telephony and Digital. That makes 96 radar diagrams in total, but hopefully this is enough of an indicator of what the final project would look like. Incidentally, the profiles shown here are M1A, M1B, M1C & M1D.
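The total of 96 is simply every profile paired with every channel. As a rough sketch, with placeholder profile names standing in for the real 32 profile codes (which I have not reproduced here), the full set of diagrams can be enumerated like this:

```python
from itertools import product

CHANNELS = ("Face to Face", "Telephony", "Digital")

# Placeholder names only; the real profile codes are defined in GPG 45.
PROFILES = [f"profile_{n:02d}" for n in range(1, 33)]

# One radar diagram per (profile, channel) pair.
diagrams = list(product(PROFILES, CHANNELS))

print(len(diagrams))
```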

The Reception

I was particularly pleased with how this work was received, as I knew first-hand how contentious and open to interpretation this subject matter could be.

It was a welcome touch-point for multiple disciplines and was requested for reuse on a number of Mural boards and decks. It culminated in a nomination for reward and recognition which was later approved by the Senior Leadership Team (see below):

“Steve joined the Policy Team on an EOI and has hit the ground running and made an immediate positive impact. He has taken the time to sharpen and reformat a number of Policy outputs/products, in particular the Policy Risk Assessments which has had a positive impact for stakeholders and colleagues. Steve is also utilising his design skills in preparing some new educational material for use with colleagues in the wider directorate, all of this while learning and upskilling in the policy world. His fresh ideas and approach have been welcomed by the Policy Team. He is a positive role model and his willingness to go the extra mile while learning is commendable”

Nomination Sponsor – ID&T Deputy Director